So here's the thing: building applications has always been a pain for me, especially when it came to getting them live. My old workflow was tedious: manually containerize the app, SSH into an EC2 server, and push everything by hand. The process was slow and boring, and it simply didn't hold up once projects got bigger.

That's when I learned about CI/CD pipelines and realized they were exactly what I needed to automate the whole path from code to production and stop doing everything by hand.

How the Whole Thing Works

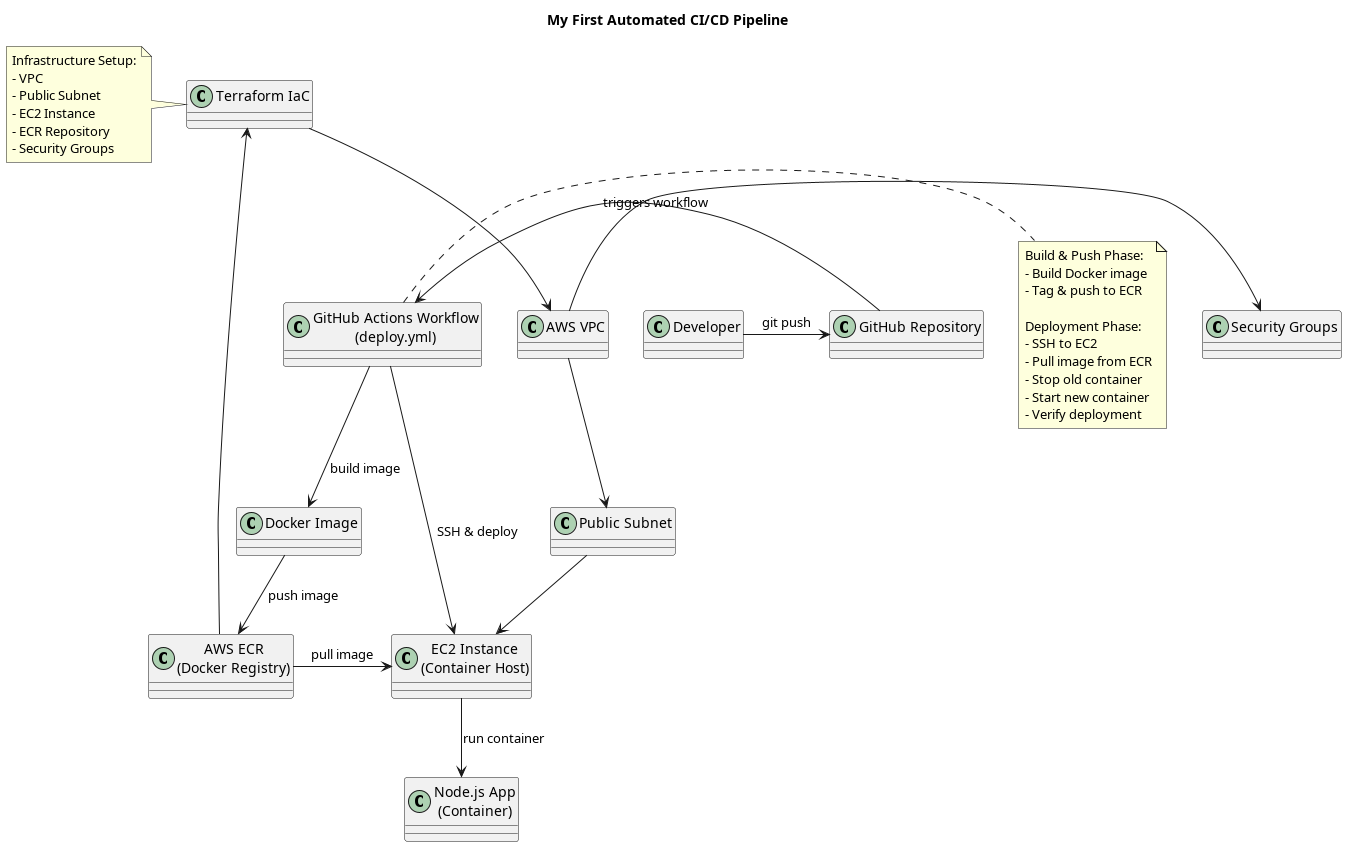

The pipeline I built is fairly straightforward: it starts with a simple git push and ends with the app running in production. Here's how the pieces fit together.

Setting Up Infrastructure with Terraform

Before I could automate deployments, I needed a solid foundation. Instead of clicking around the AWS console (which can honestly be confusing), I used Terraform to define the infrastructure as code. That makes the setup repeatable and lets me track every change in version control.

Here's what Terraform sets up for me:

- VPC: think of this as my own private, isolated network on AWS

- Public subnet: where I put resources that need to talk to the internet

- EC2 instance: the server that actually runs my app

- ECR repository: a private registry for my Docker images

- Security Groups: virtual firewalls that act like bouncers, only letting the right traffic through
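To make the networking pieces a bit more concrete, here's a small Python model (not Terraform code) of two rules behind this layout: a subnet has to sit entirely inside its VPC's address range, and a security group only lets inbound traffic through when some allow rule matches it. It's a simplified sketch (TCP ports only, inbound only), and every CIDR, port, and name in it, including IngressRule, subnet_fits_in_vpc, and is_allowed, is a hypothetical example rather than my actual config.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """One inbound allow rule: a TCP port and the source CIDR allowed to reach it."""
    port: int
    source_cidr: str


# Hypothetical values for illustration only; the real CIDRs and ports aren't in this post.
VPC_CIDR = "10.0.0.0/16"
PUBLIC_SUBNET_CIDR = "10.0.1.0/24"
INGRESS_RULES = [
    IngressRule(port=22, source_cidr="203.0.113.10/32"),  # SSH from a single admin IP
    IngressRule(port=80, source_cidr="0.0.0.0/0"),        # HTTP from anywhere
]


def subnet_fits_in_vpc(subnet_cidr: str, vpc_cidr: str) -> bool:
    """A subnet's address range must lie entirely inside its VPC's range."""
    return ipaddress.ip_network(subnet_cidr).subnet_of(ipaddress.ip_network(vpc_cidr))


def is_allowed(port: int, source_ip: str, rules: list[IngressRule]) -> bool:
    """Allow-list semantics: inbound traffic is dropped unless at least one rule matches."""
    addr = ipaddress.ip_address(source_ip)
    return any(
        rule.port == port and addr in ipaddress.ip_network(rule.source_cidr)
        for rule in rules
    )


if __name__ == "__main__":
    print(subnet_fits_in_vpc(PUBLIC_SUBNET_CIDR, VPC_CIDR))  # True
    print(is_allowed(80, "198.51.100.7", INGRESS_RULES))     # True: HTTP is open to everyone
    print(is_allowed(22, "198.51.100.7", INGRESS_RULES))     # False: SSH only from the admin IP
```

The point of the "bouncer" analogy is the default: anything not explicitly allowed stays out, which is why SSH can be limited to one address while the web port is open to everyone.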

Packaging Everything with Docker

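I package the Node.js app with Docker using a multi-stage build. A multi-stage build typically installs dependencies and builds the app in one stage, then copies only what's needed at runtime into a slimmer final stage. That keeps the final image small, and because the same image runs everywhere, the app behaves the same on my laptop as it does in production.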

The Magic: GitHub Actions Workflow

This is where things get interesting. A workflow file called deploy.yml does all the heavy lifting. Every time I push code to GitHub, it automatically runs two steps:

Build and Push Step

- Takes the latest code and builds a new Docker image

- Tags it with a version identifier so I can tell which code each image contains

- Pushes it to my private ECR repository
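Here's a minimal Python sketch of what the build-and-push step boils down to. It assumes the image is tagged with a short commit SHA (GitHub Actions exposes the full SHA as the GITHUB_SHA environment variable). The account ID, region, and repository name are placeholders, and ImageRef, tag_from_commit, and build_and_push_commands are hypothetical helpers that only assemble the shell commands rather than run them.

```python
import os
from dataclasses import dataclass

HEX_DIGITS = set("0123456789abcdef")


@dataclass(frozen=True)
class ImageRef:
    """Where an image lives in a private ECR registry, plus the tag for this build."""
    account_id: str
    region: str
    repository: str
    tag: str

    @property
    def registry(self) -> str:
        # Private ECR registries use the host <account>.dkr.ecr.<region>.amazonaws.com
        return f"{self.account_id}.dkr.ecr.{self.region}.amazonaws.com"

    @property
    def uri(self) -> str:
        return f"{self.registry}/{self.repository}:{self.tag}"


def tag_from_commit(commit_sha: str, length: int = 7) -> str:
    """Turn a git commit SHA into a short image tag so each image maps to one commit."""
    sha = commit_sha.strip().lower()
    if len(sha) < length or not set(sha) <= HEX_DIGITS:
        raise ValueError(f"not a git commit SHA: {commit_sha!r}")
    return sha[:length]


def build_and_push_commands(image: ImageRef) -> list[str]:
    """Shell commands for: log in to ECR, build the image, push it."""
    return [
        f"aws ecr get-login-password --region {image.region} | "
        f"docker login --username AWS --password-stdin {image.registry}",
        f"docker build -t {image.uri} .",
        f"docker push {image.uri}",
    ]


if __name__ == "__main__":
    # Placeholder account/region/repo; GITHUB_SHA is set automatically inside GitHub Actions.
    image = ImageRef(
        account_id="123456789012",
        region="us-east-1",
        repository="my-node-app",
        tag=tag_from_commit(os.environ.get("GITHUB_SHA", "0" * 40)),
    )
    for cmd in build_and_push_commands(image):
        print(cmd)
```

In an actual workflow these commands would run as steps in deploy.yml; building them as plain strings here just makes the order (log in, build, push) easy to read and test.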

Deploy Step

- SSHs into the EC2 instance securely

- Pulls the new image from ECR

- Stops the old container

- Starts up the new container with the fresh code

- Checks that the new container is running and the deployment succeeded
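The deploy step can be sketched the same way. This is a hypothetical Python version, assuming Docker on the instance is already authenticated to ECR, the container is named app, the Node.js server listens on port 3000, and there's an HTTP endpoint to poll afterwards; none of those specifics come from my actual setup, and deploy_commands, remote_script, http_ok, and wait_until_healthy are illustrative names. The `|| true` on stop and remove lets the very first deploy succeed when there's no old container yet.

```python
import http.client
import time
import urllib.request
from typing import Callable


def deploy_commands(image_uri: str, container: str = "app",
                    host_port: int = 80, app_port: int = 3000) -> list[str]:
    """Commands to run on the EC2 host: pull the new image and swap the container."""
    return [
        f"docker pull {image_uri}",
        f"docker stop {container} || true",  # no old container on the very first deploy
        f"docker rm {container} || true",
        f"docker run -d --name {container} --restart unless-stopped "
        f"-p {host_port}:{app_port} {image_uri}",
    ]


def remote_script(commands: list[str]) -> str:
    """Join commands into a script that aborts on the first real failure (set -e)."""
    return "\n".join(["set -e", *commands]) + "\n"


def http_ok(url: str, timeout: float = 2.0) -> bool:
    """True if the URL answers with a 2xx status; connection or HTTP errors mean not ready."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (OSError, http.client.HTTPException):
        return False


def wait_until_healthy(check: Callable[[], bool], attempts: int = 10, delay_s: float = 3.0,
                       sleep: Callable[[float], None] = time.sleep) -> bool:
    """Poll check() up to `attempts` times, sleeping between tries; True once it passes."""
    for attempt in range(attempts):
        if check():
            return True
        if attempt < attempts - 1:
            sleep(delay_s)
    return False


if __name__ == "__main__":
    uri = "123456789012.dkr.ecr.us-east-1.amazonaws.com/my-node-app:a1b2c3d"  # placeholder
    print(remote_script(deploy_commands(uri)))
    # After the script runs on the instance (the /health path is hypothetical):
    # wait_until_healthy(lambda: http_ok("http://localhost/health"))
```

The generated script could be piped over SSH with something like `ssh <user>@<host> 'bash -s' < deploy.sh`. Polling with a short delay gives the Node.js process a few seconds to boot before the deploy is treated as failed.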

What I Got Out of This

This setup completely changed how I work. What used to be a manual process that took several minutes (and sometimes broke) now runs automatically as soon as I push code.

The "Git Push to Production" pipeline eliminated all the tedious work and let me focus on actually building features instead of dealing with deployment headaches.

Using Infrastructure as Code, Docker, and automated CI/CD together made my whole development process way more reliable and much less stressful. Now when I want to deploy a new feature, I just push my code and watch it go live automatically.

Pretty cool, right?